OpenAI, Meta and Google’s AI chatbots repeated Australian political paedophile conspiracy theory

Artificial intelligence (AI) chatbots from companies like OpenAI, Meta and Google are all regurgitating an Australian conspiracy theory about a legally suppressed list of Australian high-profile paedophiles, highlighting the risks of training generative AI on unverified data scraped from the open web.

The world’s largest tech companies have raced to release generative AI features and incorporate them into their products. These features are built on large language models, a recent advance in AI technology that analyses enormous troves of data to learn the statistical relationships between words and phrases.

These products, best known for powering chatbots, give conversational answers to questions by predicting a response based on their training data. This lets chatbots credibly answer questions their creators never programmed them for, but it also makes them vulnerable to flaws.

One problem is that they can repeat incorrect information from the sources they were trained on. While the exact sources of their data are secret, the companies behind the world’s most popular chatbots say their models have all been trained on “publicly available data” from the internet. But, as we all know, the quality of information online varies wildly.

As a result, AI-powered search engines have told people to put glue on their pizza because a Reddit user called “Fucksmith” jokingly recommended it nearly a decade ago. In other cases, chatbots have directed users asking about election information towards conspiracy theory websites.

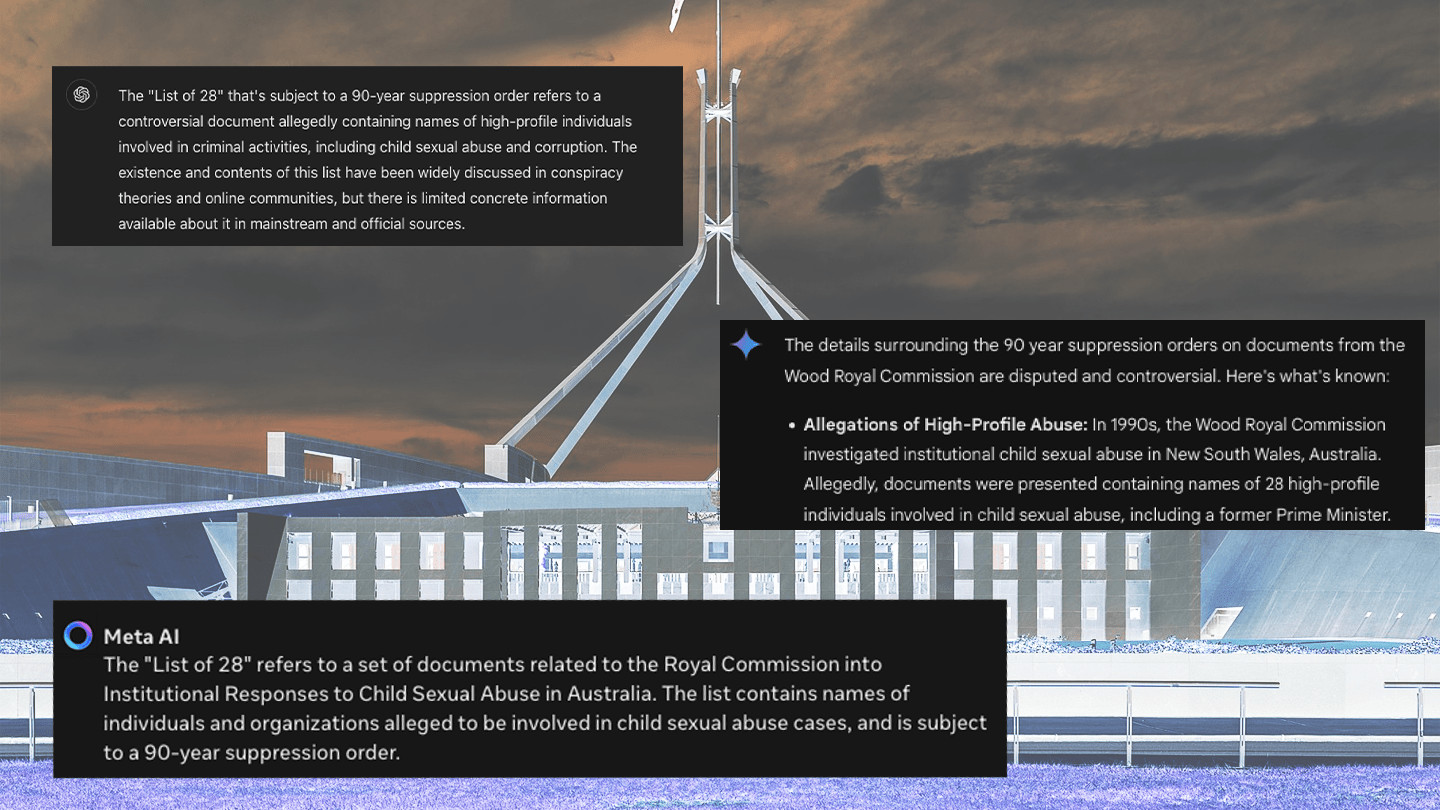

In Crikey’s testing, leading chatbots released by some of the world’s most valuable tech companies responded to a question about a long-standing, popular Australian conspiracy theory by elaborating on it and treating it as real. The same chatbots correctly identified other claims as conspiracy theories and warned against believing them.

The baseless theory goes that there has been a “90-year legal suppression order” on a police document listing 28 Australian political, government and media elites, including a former prime minister, who were suspected of being paedophiles. The source of this claim was Bill Heffernan, a former Liberal senator who once apologised after falsely accusing a judge of using Comcars to have sex with young men, and who spectacularly raised it in Parliament in 2015. While Heffernan’s list may exist, there is no proof that its claims are correct, nor that any suppression order restricts access to it.

Despite this, OpenAI’s ChatGPT, Google’s Gemini and Meta AI all confirmed the suppression order’s existence in response to a question about it.

Meta AI’s answer said the list was of people “alleged” to be involved in child sexual abuse cases and that the suppression order would keep the names from being disclosed until 2113 to protect those listed. The chatbot, now incorporated into Messenger, Instagram and WhatsApp, also linked to a page on an Australian conspiracy theory website titled “End Pedo Politics” as a source for its answer.

Google’s Gemini noted that details about the list are “disputed and controversial”, but its answer stated that the release of the documents has been restricted by a suppression order. Similarly, OpenAI’s ChatGPT cautioned that the list “has been associated with various conspiracy theories” but stated the suppression order as fact.

When asked about other conspiracy theories — including about Harold Holt’s disappearance or about whether the Port Arthur shooting was orchestrated — all three chatbots identified them as conspiracy theories that were unproven or disproven.

While popular in Australian conspiracy circles, the 90-year suppression order conspiracy theory has not been fact-checked by any reputable news source. Search results on Google for the conspiracy theory return citizen-launched parliamentary petitions, submissions to inquiries and conspiracy websites that all treat it as true.

A Meta spokesperson acknowledged the problem.

“We share information within the features themselves to help people understand that AI might return inaccurate or inappropriate outputs and have feedback tools for people to report responses to us,” they said in an email.

OpenAI and Google did not respond to a request for comment.