AI-generated news is unhuman slop. Crikey is banning it

Crikey’s first-ever edition arrived in inboxes on Valentine’s Day, February 14, 2000. It included a reflection on Lachlan Murdoch from his former philosophy tutor, pseudonymous insider gossip from the former political staffer Christian Kerr, and lists of journalists who’d previously worked in politics. There was a profile of Rupert Murdoch lieutenant Col Allan titled “Pissing in the Sink”.

From our inception, we have been so very Crikey. There are a lot of things that make up Crikeyness, but central is its humanness. Like most people, we want to cut through the bullshit, know how the world and power works, hear the gossip, tell stories, take the piss and have fun. We write the way we wish other people would write, which is the way we talk to each other, for our readers.

In the near quarter-century of dispatches (during which newsletters went from being cool, to cringe, back to cool, and are now swinging back towards cringe again) Crikey has remained remarkably consistent because our job didn’t change. We didn’t pivot to iPad or video. We just kept doing the same old Crikey: having people write about people for other people. Fundamentally, this is what we think journalism is about.

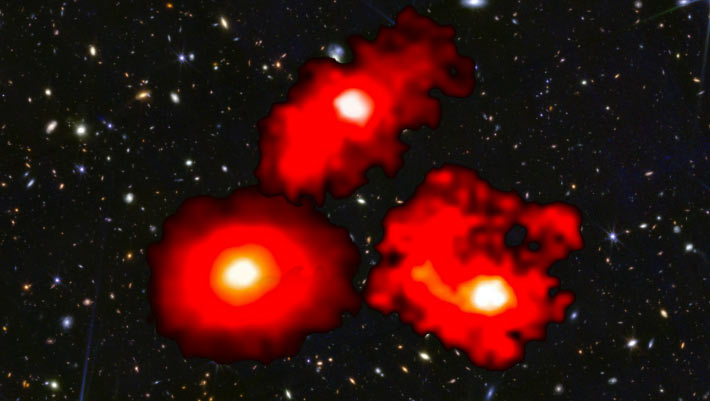

But there is a threat to this philosophy. Artificial intelligence, specifically advances in generative AI, has been the defining tech trend of the past 18-odd months. Every industry has been experimenting with how to use the technology, including the media. Predictably, some are already using it to create the news. While some outlets have been caught publishing AI-written articles without disclosure, others are openly publishing articles and artwork made by a machine, even though it makes most Australians uneasy.

Our official position at Crikey is that this stinks. To understand our revulsion, you need to understand how generative AI, specifically large language models, work.

The first step in creating one of these models is hoovering up a huge amount of data. Most data is ripped from the internet without permission en masse. Companies that do this have ingested lots of intellectual property belonging to people who did not agree to being sucked into someone’s AI model, along with AI-generated, low-quality information and even some illegal and abhorrent images.

The next step is training on the data to understand the relationships between all the words and phrases. For example, if you input “the founder of Crikey is”, the most common response among its dataset is probably something like “Stephen Mayne”, which is true. But AI doesn’t draw on years of close observation of Australian media when it answers this question. It makes a calculation based on all the times other people have continued that sentence to come up with its response.
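To make that calculation concrete, here is a deliberately crude sketch. It is a toy that literally counts how often each continuation follows a prompt; real large language models use neural networks that assign probabilities to word fragments rather than tallying sentences. The `corpus` list and the `most_common_continuation` function are invented for illustration and do not come from any real system.

```python
from collections import Counter

# Hypothetical toy "dataset": a handful of made-up sentences.
corpus = [
    "the founder of crikey is stephen mayne",
    "the founder of crikey is stephen mayne",
    "the founder of crikey is a journalist",
    "the editor of crikey is busy",
]


def most_common_continuation(prompt, sentences, length=2):
    """Return the most frequent `length`-word continuation of `prompt`.

    Returns None when the prompt never appears in the sentences.
    """
    prompt_words = prompt.lower().split()
    n = len(prompt_words)
    counts = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        # Slide over the sentence looking for the prompt.
        for i in range(len(words) - n + 1):
            if words[i:i + n] == prompt_words:
                continuation = tuple(words[i + n:i + n + length])
                if continuation:
                    counts[continuation] += 1
    if not counts:
        return None
    best, _ = counts.most_common(1)[0]
    return " ".join(best)


print(most_common_continuation("the founder of Crikey is", corpus))
```

Nothing in this sketch knows who Stephen Mayne is. It returns whichever words most often followed the prompt in the text it was given, and returns nothing at all for a prompt it has never seen.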

The point is that AI does not understand what it’s being asked to do. It doesn’t know what words mean. It does not think or care about who’s reading what it’s putting out. It certainly does not consider the different angles of a story and who stands to gain from accepting the spin. It’s mindless regurgitation, like a press gallery journalist repeating a politician’s promise without interrogating it.

There are a lot of other reasons to dislike the AI hype and its industry of boosters. It’s a hugely energy-intensive technology that requires incredible amounts of power to use and train, significantly more than other computing methods need to complete the same task, such as searching the web.

It produces bullshit — not our words, bullshit is a scientific, technical term — when it frequently and confidently tells lies. Even when it tells the truth, its answers have racial and sexual biases.

The technology can’t be separated from its unscrupulous creators. The industry’s original sin was stealing the world’s data without permission. Everything it produces is fruit from the poisonous tree. Companies say you can opt out of having your data collected, but only told us this after they got their fill of information. And sometimes these companies lie about letting you opt out.

These companies have grown rich and fat by selling subscriptions and advertising on the data they stole from us. This digital colonial mindset continues in AI’s users, too. They use it to reproduce our art, our photographs, our writing and our faces, all without permission and often to our displeasure.

No matter how advanced it gets, AI can never do the work of journalism. It can’t pick up the phone, go somewhere, speak to people, or think of a new angle or problem or solution. That doesn’t mean it can’t be used as a poor substitute. As we’ve already seen in Australia, people are already using AI to rip off other people’s journalism for their own gain and to compete against real stuff. They steal the eyeballs and dollars required to do the work that they think is valuable enough to take for themselves.

The current state of AI is an industry built around a wasteful, violating technology used to create poor imitations of work, riddled with errors and biases, that threaten the very systems that produce the information from which they seek to extract value.

That’s a good reason to boycott it, but our reason is simpler: AI-generated content is bad to read and see. It’s bland and beige. It’s slop that doesn’t stand up to scrutiny but is a close enough approximation to something real that it can fill up space where people don’t pay attention. It’s something that someone didn’t care enough about to spend time to write or create.

It is the opposite of what Crikey is. We believe that AI can never replicate our essential Crikeyness. A thousand chatbots in a thousand years would never come up with a title as evocative, incisive or beautiful as “Pissing in the Sink”. Using AI to generate Crikey would be an insult to you, the reader, as well as everyone who has made Crikey what it is. We’ve fiddled with AI image generation and the occasional block of text as a proof of concept in the past, and our editorial guidelines already require that any AI-generated content is disclosed. But we want to go a step further.

Crikey will never use AI to create what you read and see from us. We will spend the time writing the drafts, we will make the calls, we will read the documents, we will scheme for ways to piss off the right people. We will do the hard, time-consuming, expensive work — because if we didn’t, we wouldn’t be doing what our audience deserves. We join a movement of people rejecting AI-generated content to fight for the real stuff, and we invite other news organisations to join us.

We promise that everything you read and see in Crikey is made by a real person who gives a shit.

Want human-generated news? Subscribe to our free newsletters. Do you support our policy on artificial intelligence? Let us know your thoughts in the comments or by writing to [email protected]. Please include your full name to be considered for publication. We reserve the right to edit for length and clarity.
