What’s next in chips

How Big Tech, startups, AI devices, and trade wars will transform the way chips are made and the technologies they power.

MIT Technology Review’s What’s Next series looks across industries, trends, and technologies to give you a first look at the future. You can read the rest of them here.

Thanks to the boom in artificial intelligence, the world of chips is on the cusp of a huge tidal shift. There is heightened demand for chips that can train AI models faster and ping them from devices like smartphones and satellites, enabling us to use these models without disclosing private data. Governments, tech giants, and startups alike are racing to carve out their slices of the growing semiconductor pie.

Here are four trends to look for in the year ahead that will define what the chips of the future will look like, who will make them, and which new technologies they’ll unlock.

CHIPS Acts around the world

On the outskirts of Phoenix, two of the world’s largest chip manufacturers, TSMC and Intel, are racing to build campuses in the desert that they hope will become the seats of American chipmaking prowess. One thing the efforts have in common is their funding: in March, President Joe Biden announced $8.5 billion in direct federal funds and $11 billion in loans for Intel’s expansions around the country. Weeks later, another $6.6 billion was announced for TSMC.

The awards are just a portion of the US subsidies pouring into the chips industry via the $280 billion CHIPS and Science Act signed in 2022. The money means that any company with a foot in the semiconductor ecosystem is analyzing how to restructure its supply chains to benefit from the cash. While much of the money aims to boost American chip manufacturing, there’s room for other players to apply, from equipment makers to niche materials startups.

But the US is not the only country trying to onshore some of the chipmaking supply chain. Japan is spending $13 billion on its own equivalent to the CHIPS Act, Europe will be spending more than $47 billion, and earlier this year India announced a $15 billion effort to build local chip plants. The roots of this trend go all the way back to 2014, says Chris Miller, a professor at Tufts University and author of Chip War: The Fight for the World’s Most Critical Technology. That’s when China started offering huge subsidies to its chipmakers.

“This created a dynamic in which other governments concluded they had no choice but to provide incentives or see firms shift manufacturing to China,” he says. That threat, coupled with the surge in AI, has led Western governments to fund alternatives. In the next year, this might have a snowball effect, with even more countries starting their own programs for fear of being left behind.

The money is unlikely to lead to brand-new chip competitors or fundamentally restructure who the biggest chip players are, Miller says. Instead, it will mostly incentivize dominant players like TSMC to establish roots in multiple countries. But funding alone won’t be enough to do that quickly: TSMC’s effort to build plants in Arizona has been mired in missed deadlines and labor disputes, and Intel has similarly failed to meet its promised deadlines. And it’s unclear whether, whenever the plants do come online, their equipment and labor force will be capable of the same level of advanced chipmaking that the companies maintain abroad.

“The supply chain will only shift slowly, over years and decades,” Miller says. “But it is shifting.”

More AI on the edge

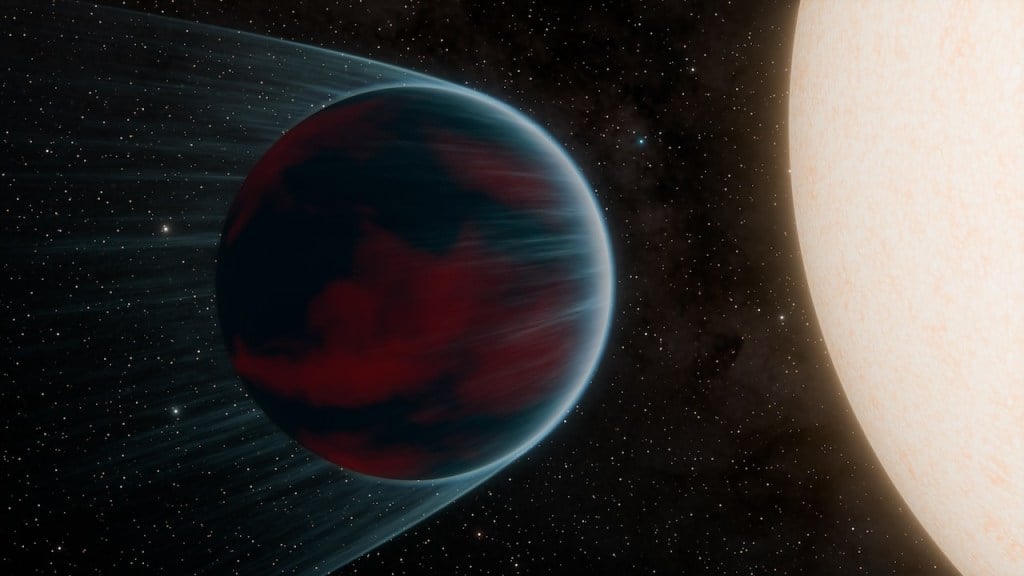

Currently, most of our interactions with AI models like ChatGPT are done via the cloud. That means that when you ask GPT to pick out an outfit (or to be your boyfriend), your request pings OpenAI’s servers, prompting the model housed there to process it and draw conclusions (known as “inference”) before a response is sent back to you. Relying on the cloud has some drawbacks: it requires internet access, for one, and it also means some of your data is shared with the model maker.

That’s why there’s been a lot of interest and investment in edge computing for AI, where the process of pinging the AI model happens directly on your device, like a laptop or smartphone. With the industry increasingly working toward a future in which AI models know a lot about us (Sam Altman described his killer AI app to me as one that knows “absolutely everything about my whole life, every email, every conversation I’ve ever had”), there’s a demand for faster “edge” chips that can run models without sharing private data. These chips face different constraints from the ones in data centers: they typically have to be smaller, cheaper, and more energy efficient.

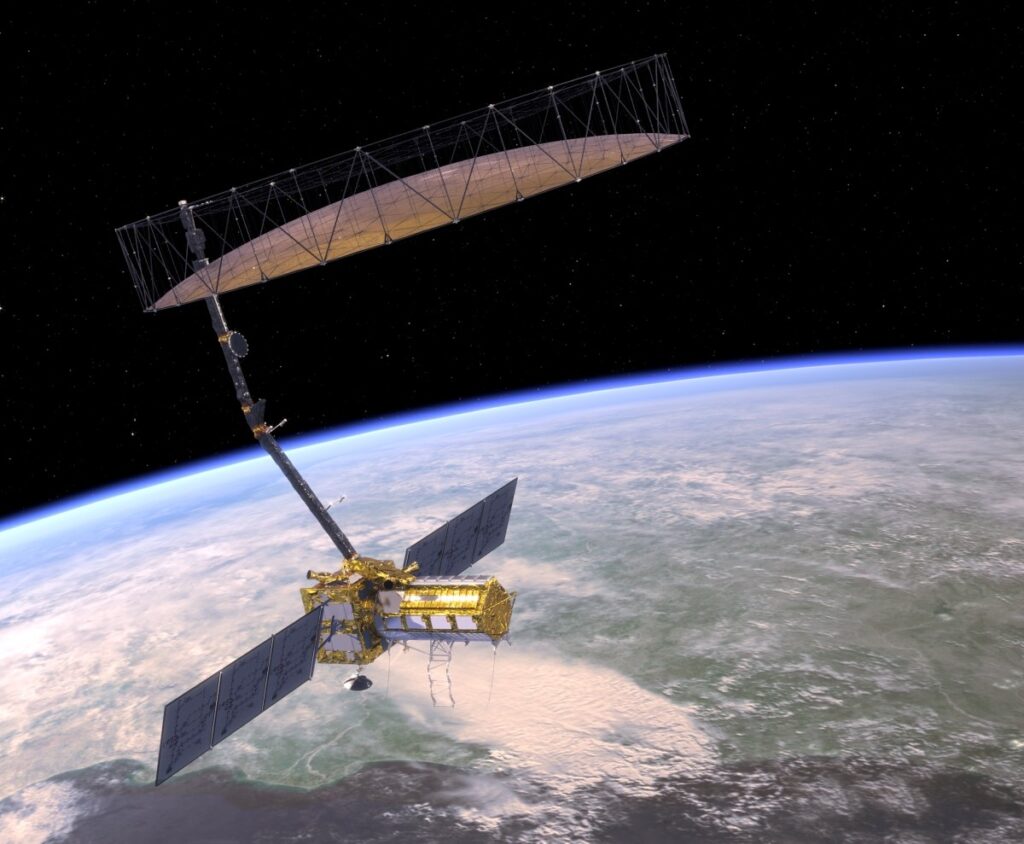

The US Department of Defense is funding a lot of research into fast, private edge computing. In March, its research wing, the Defense Advanced Research Projects Agency (DARPA), announced a partnership with chipmaker EnCharge AI to create an ultra-powerful edge computing chip used for AI inference. EnCharge AI is working to make a chip that enables enhanced privacy but can operate on very little power. This will make it suitable for military applications like satellites and off-grid surveillance equipment. The company expects to ship the chips in 2025.

AI models will always rely on the cloud for some applications, but new investment and interest in improving edge computing could bring faster chips, and therefore more AI, to our everyday devices. If edge chips get small and cheap enough, we’re likely to see even more AI-driven “smart devices” in our homes and workplaces. Today, AI models are mostly constrained to data centers.

“A lot of the challenges that we see in the data center will be overcome,” says EnCharge AI cofounder Naveen Verma. “I expect to see a big focus on the edge. I think it’s going to be critical to getting AI at scale.”

Big Tech enters the chipmaking fray

In industries ranging from fast fashion to lawn care, companies are paying exorbitant amounts in computing costs to create and train AI models for their businesses. Examples include models that employees can use to scan and summarize documents, as well as externally facing technologies like virtual agents that can walk you through how to repair your broken fridge. That means demand for cloud computing to train those models is through the roof.

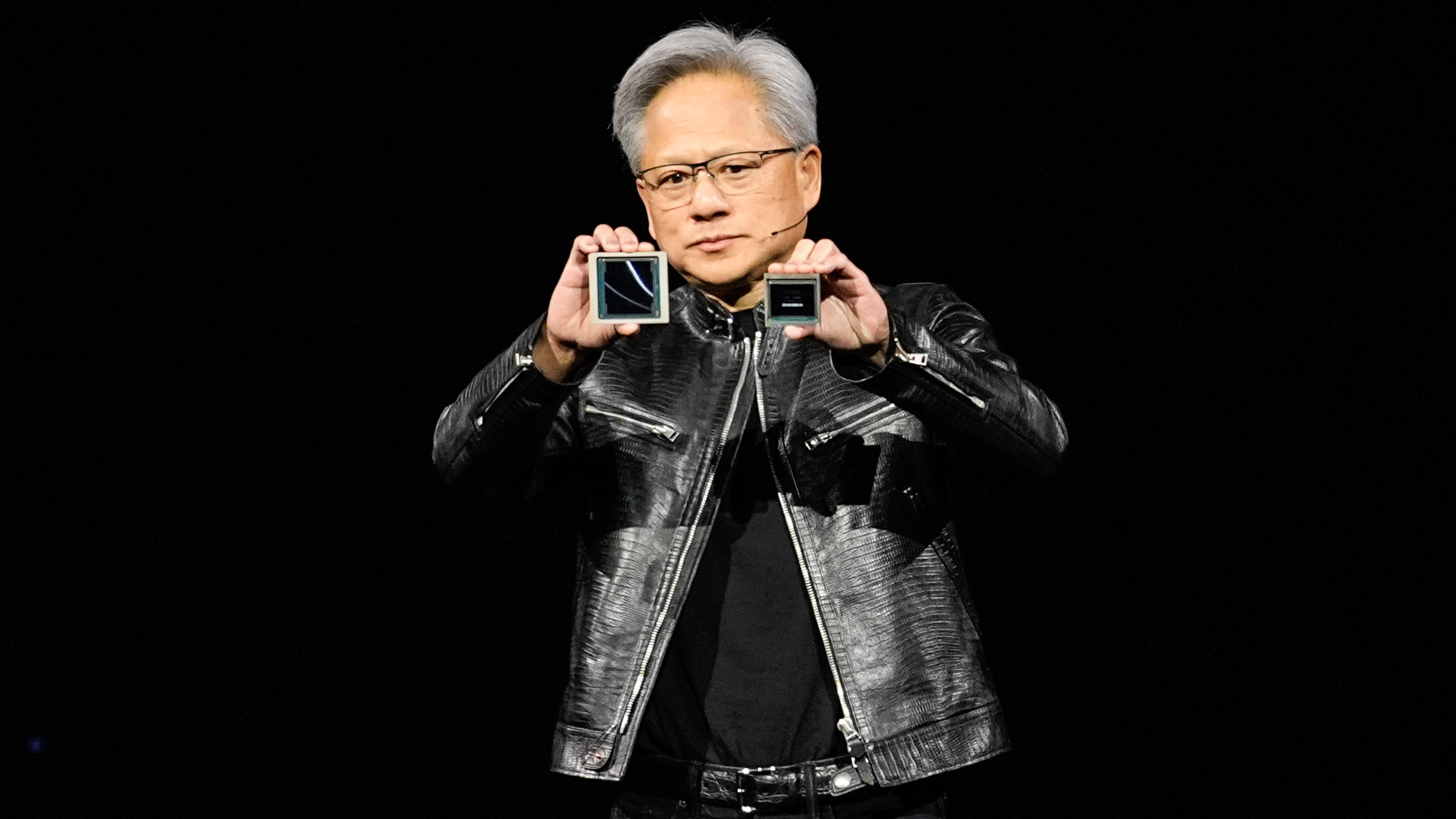

The companies providing the bulk of that computing power are Amazon, Microsoft, and Google. For years these tech giants have dreamed of increasing their profit margins by making chips for their data centers in-house rather than buying from companies like Nvidia, a giant with a near monopoly on the most advanced AI training chips and a value larger than the GDP of 183 countries.

Amazon started its effort in 2015, acquiring startup Annapurna Labs. Google moved next in 2018 with its own chips called TPUs. Microsoft launched its first AI chips in November, and Meta unveiled a new version of its own AI training chips in April.

That trend could tilt the scales away from Nvidia. But Nvidia doesn’t only play the role of rival in the eyes of Big Tech: regardless of their own in-house efforts, cloud giants still need its chips for their data centers. That’s partly because their own chipmaking efforts can’t fulfill all their needs, but it’s also because their customers expect to be able to use top-of-the-line Nvidia chips.

“This is really about giving the customers the choice,” says Rani Borkar, who leads hardware efforts at Microsoft Azure. She says she can’t envision a future in which Microsoft supplies all chips for its cloud services: “We will continue our strong partnerships and deploy chips from all the silicon partners that we work with.”

As cloud computing giants attempt to poach a bit of market share away from chipmakers, Nvidia is also attempting the opposite. Last year the company started its own cloud service so customers can bypass Amazon, Google, or Microsoft and get computing time on Nvidia chips directly. As this dramatic struggle over market share unfolds, the coming year will be about whether customers see Big Tech’s chips as akin to Nvidia’s most advanced chips, or more like their little cousins.

Nvidia battles the startups

Despite Nvidia’s dominance, there is a wave of investment flowing toward startups that aim to outcompete it in certain slices of the chip market of the future. Those startups all promise faster AI training, but they have different ideas about which flashy computing technology will get them there, from quantum to photonics to reversible computation.

But Murat Onen, the 28-year-old founder of one such chip startup, Eva, which he spun out of his PhD work at MIT, is blunt about what it’s like to start a chip company right now.

“The king of the hill is Nvidia, and that’s the world that we live in,” he says.

Many of these companies, like SambaNova, Cerebras, and Graphcore, are trying to change the underlying architecture of chips. Imagine an AI accelerator chip as constantly having to shuffle data back and forth between different areas: a piece of information is stored in the memory zone but must move to the processing zone, where a calculation is made, and then be stored back to the memory zone for safekeeping. All that takes time and energy.

Making that process more efficient would deliver faster and cheaper AI training to customers, but only if the chipmaker has good enough software to allow the AI training company to seamlessly transition to the new chip. If the software transition is too clunky, model makers such as OpenAI, Anthropic, and Mistral are likely to stick with big-name chipmakers. That means companies taking this approach, like SambaNova, are spending a lot of their time not just on chip design but on software design too.

Onen is proposing changes one level deeper. Instead of traditional transistors, which have delivered greater efficiency over decades by getting smaller and smaller, he’s using a new component called a proton-gated transistor that he says Eva designed specifically for the mathematical needs of AI training. It allows devices to store and process data in the same place, saving time and computing energy. The idea of using such a component for AI inference dates back to the 1960s, but researchers could never figure out how to use it for AI training, in part because of a materials roadblock: it requires a material that can, among other qualities, precisely control conductivity at room temperature.
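To make the cost of shuffling data concrete, here is a toy Python sketch that simply counts data transfers for a matrix-vector product, a core operation in neural-network training and inference. It compares a conventional layout, where every operand travels from the memory zone to the processing zone and each result travels back, with a layout that stores and processes data in the same place. The transfer-counting model and the function names `conventional_matvec` and `in_memory_matvec` are hypothetical illustrations, not a description of how Eva’s, SambaNova’s, or any other company’s chip actually works.

```python
def conventional_matvec(weights, x):
    """Hypothetical toy model: operands live in a memory zone and must be
    moved to a separate processing zone for every multiply-add, and each
    finished result is moved back to memory. Returns (result, transfers)."""
    transfers = 0
    result = []
    for row in weights:
        acc = 0
        for w, xi in zip(row, x):
            transfers += 2  # fetch one weight and one input into the processor
            acc += w * xi
        result.append(acc)
        transfers += 1  # store the finished sum back in memory
    return result, transfers


def in_memory_matvec(weights, x):
    """Hypothetical toy model: weights stay where they are stored and the
    math happens there, so each input is sent in once and each output is
    read out once. Returns (result, transfers)."""
    result = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    transfers = len(x) + len(weights)
    return result, transfers


if __name__ == "__main__":
    W = [[1, 2, 3], [4, 5, 6]]
    x = [1, 0, 1]
    print(conventional_matvec(W, x))  # ([4, 10], 14)
    print(in_memory_matvec(W, x))     # ([4, 10], 5)
```

Both functions compute the same numbers; only the count of data movements differs. In this toy model an m-by-n weight matrix costs 2·m·n + m transfers the conventional way versus n + m when data stays put, so the gap widens quickly as models grow.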

One day in the lab, “through optimizing these numbers, and getting very lucky, we got the material that we wanted,” Onen says. “All of a sudden, the device is not a science fair project.” That raised the possibility of using such a component at scale. After months of working to confirm that the data was correct, he founded Eva, and the work was published in Science.

But in a sector where so many founders have promised, and failed, to topple the dominance of the leading chipmakers, Onen frankly admits that it will be years before he’ll know if the design works as intended and if manufacturers will agree to produce it. Leading a company through that uncertainty, he says, requires flexibility and an appetite for skepticism from others.

“I think sometimes people feel too attached to their ideas, and then kind of feel insecure that if this goes away there won’t be anything next,” he says. “I don’t think I feel that way. I’m still looking for people to challenge us and say this is wrong.”